Improved Formatting for Bilingual Texts, and an Algorithm for its Creation

Bilingual books (ideally with audio) are very useful for language study.

Typically a text and it's translations are presented in side-by-side columns. When comparing a text against it’s translation, it’s not always easy to find the corresponding sentence in the other language.

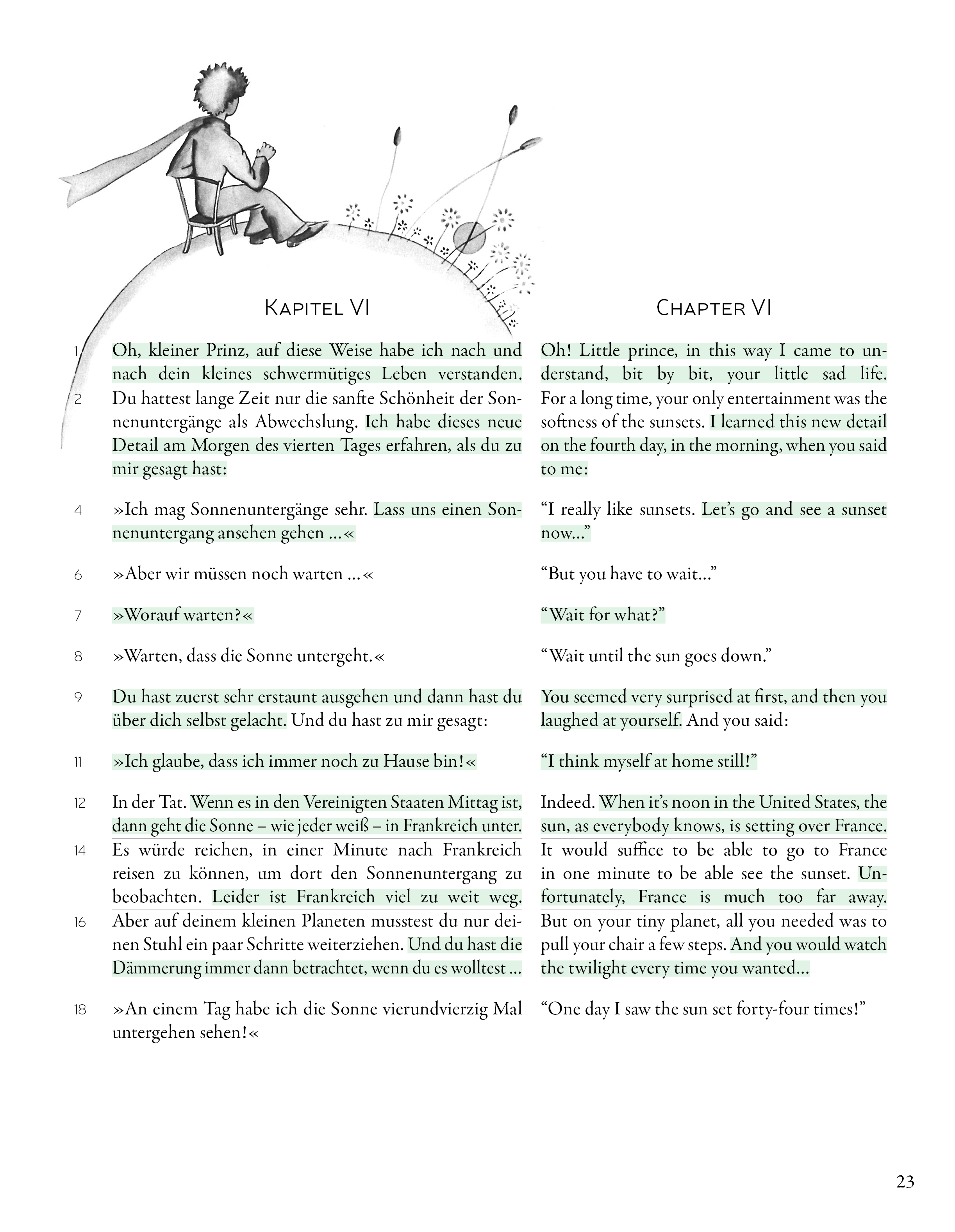

Here’s an example:

(You can read the second page of the text here if you are interested.)

Usually a paragraph and it's corresponding translation are arranged such that they start at the same vertical position on the page, but the two texts 'go out of sync' the further into a paragraph you go. If the reader is reading in one column/language, and referring frequently to the second language/column to support comprehension of what they are readying in the first, significant mental resources are required to continually locate the corresponding text in the second column, especially when paragraphs are longer.

I tried to see if I could come up with an improved formatting.

Without detailing all the different ideas and variations I tried, here's what I ended up with: (click the image to expand)

(A longer example here.)

Some notes, in no particular order:

- The overall appearance of the text is similar to that of a traditional book. The layout preserves the paragraph structure without addition symbols or conventions.

- Longer paragraphs are broken up into 'blocks', with faint lines between them. The content, and the vertical height of the starting and finishing points of these blocks in the two languages/columns, are the same. The task of locating the translation of any particular piece text in the other column/language is reduced from searching within a paragraph to searching within a 'block.'

- Within a block, alternating highlighting of text further helps to easily identify the corresponding text.

- Blocks only 'break' at sentence boundaries. That is to say, sentences are not split between blocks. The highlighting may switch part-way through a long sentence, if it is deemed too long for the student to 'digest' in one go. (Highlighting also shows how the audio is broken up into 'chunks.')

- This presentation unfortunately necessitates some adjustment of the spacing (inter-letter and inter-word) of the text to make sentences fit onto full lines. The German/English texts displayed above is a particularly challenging example. The German translation would typically occupy about 10% more space than the English on average if the columns had equal width, and the individual German and English sentences often vary greatly in length between each other. The algorithm, described below, finds the optimal arrangement of sentences into blocks that minimizes the spacing mutilation whilst keeping the blocks short. The column widths are also determined by the algorithm.

Algorithm

I hope the following is clear, although I'm aware it's a bit difficult to explain well in plain text, and I'm not sure I have succeeded.

The task of the algorithm is to break up a paragraph and it's corresponding translation into 'blocks.' That's to say, how to combine pairs of sentences (one in each language/column) into 'blocks.' We assume we are dealing with a faithful translation where the number and order of sentences in the translation matches those of the original text. With each sentence pair we place, we can either include it in the current 'block' (unless it's the first sentence pair in a paragraph), or start a new block. Two options.

We assign a 'badness' score to each block we create. This score consist of two components. One component is the 'looseness' score. This gets higher the longer the block is. If the blocks are too long, it makes it harder to find the corresponding text in the other language/column. This is bad. The other component is the 'ugliness' score, which is a function of how much we must stretch or squash the text in order to make it fit fully-justified into a fixed number of lines (a block). This makes typographists upset and is indeed unattractive and reduces legibility. We affix fudge factors to the two components and sum them to get the overall 'badness score' for a block. Finding the optimal layout for a paragraph (optimal tradeoff between between 'ugliness' and 'looseness' ..) is the question of finding the arrangement of blocks with the minimum sum of block 'badness' scores squared (or cubed.. pick a function). Squaring/cubing is a good idea, as it's preferable that all blocks have a reasonably low 'badness' score, rather than one block being awful and the others being good, a situation that otherwise wouldn't be penalised if we just sumed the 'block' scores.Let's say we have a long paragraph, 20-sentence-long. We have 2^19 different ways to arrange the sentence pairs into blocks, that's over half a million potential solutions to evaluate. Trying each arrangement to get the overall 'badness' score for the paragraph can be very time consuming, and perhaps unfeasible for very long paragraphs. However, we can use a dynamic programming/shortest path algorithm to cull the number of combinations. The approach I took is the same as that as is used in Knuth's Line Breaking Algorithm, only we are fitting sentences into 'blocks', and blocks into paragraphs, as opposed to words onto lines, and lines into paragraphs. His approach is described in his book 'Digital Typography', and in the paper 'Breaking paragraphs into lines' (Donald E. Knuth, Michael F. Plass - November 1981). Some python code examples here. It's very clever and reduces the problem from O(n ^ 2) to linear time.

We can run this algorithm over the entire book, and sum the total 'badness' for all the paragraphs. We can then vary the column widths (the proportion of the page each column occupies) in small increments, to find the column widths that result in the lowest overall 'badness' score for the book. In the example page given above, the German column is a good deal wider than the English. Overall I'm quite happy with the output of the program, it gives the student a kind of 'superpower' over the language being studied. I have some ideas for minor improvements though.

I know it's customary for Show HN posts to include the code, but frankly although it is functional with the right nudges, it is a mess that I didn't get around to cleaning up. It was continually modified and 'cludged' as I experimented with different approaches. The layout code is still attached to a complex, although vestigial and unnecessary GUI that can be used to align and edit translations, and creates an intermediate format that is imported into Indesign to create a PDF output. I hope to rewrite it sometime as a command-line program with it's own PDF rendering code and put it on github. I'm a bit hesitant though, as the code is used to create books that are currently my only source of income.

I hope you enjoyed reading about my project, and I'm always happy for an email, or interesting projects to work on. hobodrifterdavid at [the big G] dot com